Contextual Retrieval: Research by Anthropic

Have you ever asked an AI a question and felt like it completely missed the point? You’re not alone. Traditional AI search methods often struggle to find the most relevant information, leading to frustrating or just plain wrong answers. But what if AI could actually “understand” context better? Enter Contextual Retrieval—a game-changer in the world of AI-powered search, introduced by the team at Anthropic.

Context is everything when it comes to understanding and responding to complex queries. Without it, even the most advanced AI can stumble, providing answers that are technically correct but miss the mark in terms of relevance. Contextual Retrieval aims to bridge that gap, making AI systems more intuitive and effective.

So, what’s the big deal? Let's dive in.

What is Contextual Retrieval?

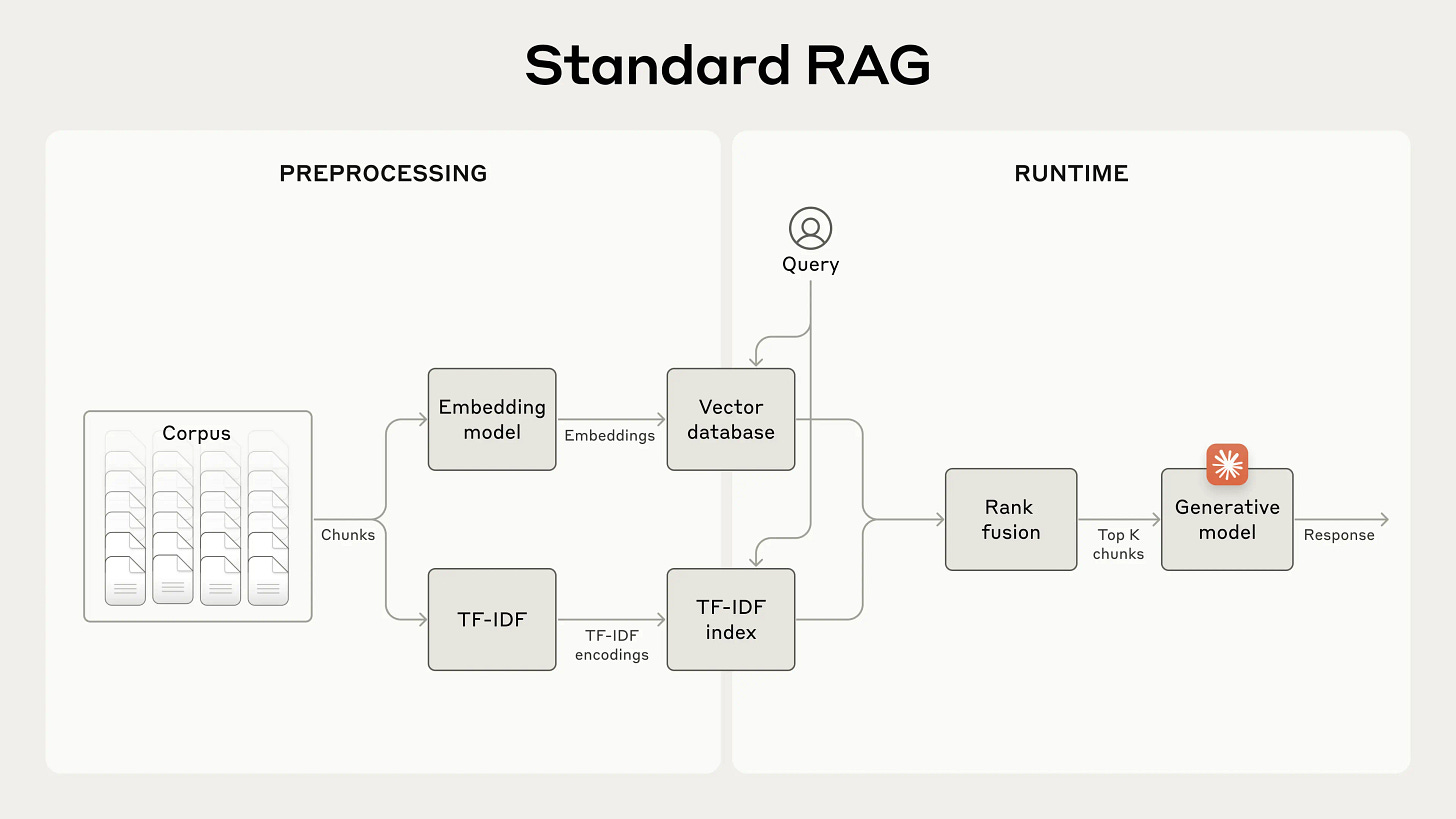

First things first, what exactly is Contextual Retrieval? To understand it, we need to take a quick look at Retrieval-Augmented Generation, or RAG for short. RAG is a type of AI system that combines two powerful capabilities: information retrieval and language generation. Think of it as a super-smart librarian who not only finds the right books for your query but also summarizes them in a way that directly answers your question. It’s like having a conversation with an expert who knows exactly where to look and how to explain things.

RAG systems have been a game-changer in AI, allowing models to access vast amounts of information without having to memorize it all. Instead, they retrieve relevant documents or text chunks on the fly and use that information to generate responses. This makes them incredibly versatile and up-to-date, as they can pull from the latest data. However, the challenge has always been ensuring that the retrieved information is not just relevant but also contextually appropriate.

Here’s where Contextual Retrieval comes in. Sometimes, even the best RAG systems can miss the mark. Imagine asking about the impact of AI on healthcare, and the system pulls up a chunk of text about AI in general without specifying the healthcare context. It’s relevant, but it lacks the specific context you need. Contextual Retrieval is like giving your AI a pair of glasses to see the finer details and understand the full story. It enhances RAG systems by making the retrieved information more contextually aware, so the answers you get are not just accurate but also deeply relevant.

How Does Contextual Retrieval Work?

So, how does Contextual Retrieval actually work? It’s a two-step process that enhances the way information is retrieved and understood. Let’s break it down.

Step 1: Creating Contextualized Chunks

In traditional RAG systems, text is broken down into chunks (small pieces of information) and the system retrieves the most relevant ones based on your query. But here’s the catch: sometimes these chunks don’t have enough context on their own to be truly useful. Contextual Retrieval solves this by having a language model write a short, descriptive summary for each chunk and attaching it to the chunk before it is indexed. This summary acts like a mini-introduction, explaining what the chunk is about and where it fits in the larger document.

For example, let’s say you have a chunk of text that reads, "AI models require rigorous testing to ensure they don’t produce harmful outputs." On its own, that’s useful, but it doesn’t tell the full story. Contextual Retrieval might add a summary like, "This section discusses the importance of safety protocols in AI development, focusing on testing methodologies to prevent unintended consequences." Now, the system has a clearer idea of how this chunk relates to queries about AI safety.

Think of it like searching for a recipe online. If you type "chocolate cake," you might get a ton of results—some fancy, some simple. But if you add context, like "chocolate cake for beginners," you’re more likely to find recipes that match your skill level. These contextual summaries do the same thing for AI, helping it zero in on the most relevant chunks.
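To make Step 1 concrete, here is a minimal sketch of attaching a summary to each chunk. It is an illustration under stated assumptions, not Anthropic’s implementation: the names `ContextualChunk`, `split_into_chunks`, `contextualize`, and `stub_summarizer` are hypothetical, the splitter is a naive word-count splitter, and the summarizer is a stub standing in for a real language-model call.

```python
from dataclasses import dataclass


@dataclass
class ContextualChunk:
    context: str  # short description of what the chunk is about
    text: str     # the original chunk, unchanged

    def to_index_text(self) -> str:
        # Prepend the context so search sees both parts together.
        return f"{self.context}\n\n{self.text}"


def split_into_chunks(document: str, max_words: int = 40) -> list[str]:
    """Naive fixed-size splitter by word count (hypothetical helper)."""
    words = document.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


def contextualize(chunks, summarize):
    """Attach a summary to every chunk.

    `summarize` is any callable mapping a chunk to a string. In a real
    system it would call a language model; it is injected here so the
    sketch runs offline.
    """
    return [ContextualChunk(context=summarize(c), text=c) for c in chunks]


def stub_summarizer(chunk: str) -> str:
    # Assumption: a fixed description stands in for a model-written summary.
    return "This section discusses safety protocols in AI development."


chunk = "AI models require rigorous testing to ensure they don't produce harmful outputs."
contextual = contextualize([chunk], stub_summarizer)
print(contextual[0].to_index_text())
```

Keeping the original text and the added context as separate fields means the summary can be regenerated later without touching the source chunk.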

Step 2: Embedding the Contextualized Chunks

Next up is the techy part: embeddings. An embedding is a list of numbers that represents a piece of text, capturing its meaning in a form machines can compare. In standard RAG systems, embeddings help the AI find chunks that are similar to your query. Contextual Retrieval keeps that mechanism but changes what gets embedded: each chunk is embedded together with its added context rather than on its own. Anthropic’s write-up calls this Contextual Embeddings and pairs it with a keyword index (BM25) built over the same contextualized text. It does not rely on a specially fine-tuned embedding model.

Because the context travels with the chunk, the search doesn’t just look at the raw text; it also takes the summary into account. The result? It’s way better at retrieving the exact chunks that answer your question. Imagine a dog playing fetch. If every stick looks the same, it might grab any of them, but put a label on yours and it brings back the exact one you threw. That’s what the added context does: it helps the system fetch the right stuff with precision.

Together, these two steps, contextualized chunks and embeddings built from them, make Contextual Retrieval a powerful upgrade to how AI systems handle information.
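To make similarity search over embeddings concrete, here is a toy sketch. It assumes a plain word-count vector as a stand-in for a real embedding model (which would be a neural network), and the names `embed`, `cosine`, and `rank` are hypothetical. It only illustrates why a chunk that carries its context can score higher against a query than the same chunk on its own.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (assumption, not a real model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank(query: str, texts: list[str]) -> list[tuple[float, str]]:
    """Return (score, text) pairs, best match first."""
    q = embed(query)
    return sorted(((cosine(q, embed(t)), t) for t in texts), reverse=True)


raw = "AI models require rigorous testing to ensure they don't produce harmful outputs."
context = (
    "This section discusses the importance of safety protocols in AI "
    "development, focusing on testing methodologies to prevent unintended "
    "consequences."
)
query = "safety protocols in AI development"

raw_score = cosine(embed(query), embed(raw))
ctx_score = cosine(embed(query), embed(context + "\n\n" + raw))
print(f"raw chunk: {raw_score:.3f}, contextualized chunk: {ctx_score:.3f}")
```

With this toy vectorizer the contextualized text scores higher simply because it shares more words with the query. A learned embedding model captures meaning rather than exact word overlap, but the ranking step works the same way.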

Why Should You Care About Contextual Retrieval?

Alright, let’s talk benefits. Why does Contextual Retrieval matter to someone like you, who’s keen to understand AI in detail? Well, the perks are pretty impressive.

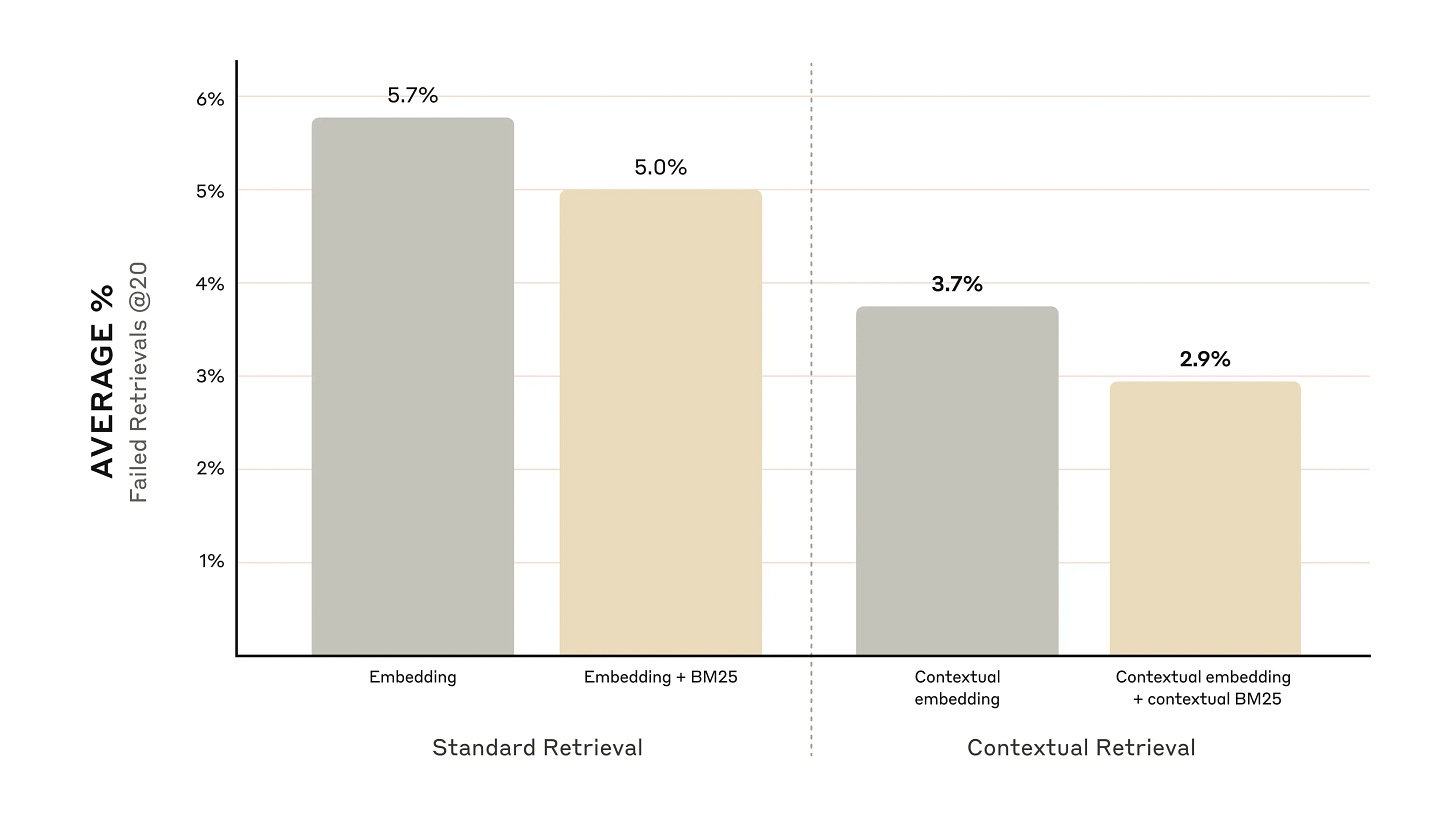

In Anthropic’s tests, Contextual Retrieval reduced the retrieval failure rate of RAG systems by up to 49%. Let’s break that down. If a standard RAG system failed to retrieve the right information 15% of the time, a 49% reduction would bring that to about 7.65%. That’s a huge leap: roughly half as many misses. For you, that means fewer frustrating moments where the AI misses the point of your question.
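The arithmetic behind a relative reduction is easy to check. The short sketch below assumes the illustrative 15% baseline from the paragraph above (a hypothetical figure, not a measured one) together with the reported reduction of up to 49%. The function name `reduced_failure_rate` is made up for this example.

```python
def reduced_failure_rate(baseline: float, relative_reduction: float) -> float:
    """Apply a relative reduction to a baseline failure rate."""
    return baseline * (1.0 - relative_reduction)


baseline = 0.15    # hypothetical baseline failure rate
reduction = 0.49   # "up to 49%" relative reduction

new_rate = reduced_failure_rate(baseline, reduction)
print(f"failure rate: {baseline:.2%} -> {new_rate:.2%}")
print(f"success rate: {1 - baseline:.2%} -> {1 - new_rate:.2%}")
```

Note that a 49% reduction is relative to the baseline: the failure rate drops by about half, while the success rate in this example moves from 85% to a little over 92%.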

It also boosts precision and recall. Precision is about how many of the retrieved chunks are actually relevant, while recall is how many of the relevant chunks it manages to grab out of everything available. Contextual Retrieval improves both, so you get more of the good stuff and less random noise. Imagine asking about the ethics of AI in hiring: instead of getting a mix of vaguely related bits, you’d get spot-on info about ethics in that specific context.
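The two definitions above translate directly into code. This is a small sketch with made-up chunk IDs, and `precision_recall` is a hypothetical helper name.

```python
def precision_recall(retrieved: list[str], relevant: set[str]) -> tuple[float, float]:
    """Compute precision and recall for one query.

    precision = relevant retrieved / all retrieved
    recall    = relevant retrieved / all relevant
    """
    retrieved_set = set(retrieved)          # ignore duplicate results
    hits = len(retrieved_set & relevant)    # relevant chunks we found
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Made-up example: 4 chunks retrieved, 3 chunks are truly relevant.
retrieved = ["c1", "c2", "c3", "c4"]
relevant = {"c1", "c3", "c7"}

p, r = precision_recall(retrieved, relevant)
print(f"precision={p:.2f}, recall={r:.2f}")
```

Here two of the four retrieved chunks are relevant (precision 0.50), and two of the three relevant chunks were found (recall about 0.67).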

Plus, it’s a champ at handling tricky questions. Ever asked something where the answer isn’t in one neat package but scattered across multiple places? Contextual Retrieval helps the AI connect the dots. For example, if you’re digging into the history of AI, it could link milestones across different chunks—like how early neural networks led to today’s models—giving you a fuller, richer answer.

What’s the Catch?

No tech is perfect, right? Let’s talk about the challenges and limitations of Contextual Retrieval.

One biggie is computational cost. Creating summaries for tons of text chunks can hog resources, especially if you need answers fast in real time. But here’s the workaround: if your data isn’t changing (a library of articles, say), you can pre-compute everything. Do the heavy lifting once, store the results, and later queries zip along without regenerating any summaries.
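The pre-compute workaround can be sketched as a simple on-disk cache. This is one possible design under stated assumptions, not a prescribed one: summaries are keyed by a hash of the chunk text and stored in a JSON file, and `chunk_key`, `load_cache`, `summaries_with_cache`, and `counting_summarizer` are hypothetical names. The summarizer is a stub that counts its calls so the saving is visible.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def chunk_key(chunk: str) -> str:
    # A content hash, so an unchanged chunk always maps to the same key.
    return hashlib.sha256(chunk.encode("utf-8")).hexdigest()


def load_cache(path: Path) -> dict:
    return json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}


def summaries_with_cache(chunks, summarize, path: Path) -> dict:
    """Summarize only chunks that are not already in the cache file."""
    cache = load_cache(path)
    for chunk in chunks:
        key = chunk_key(chunk)
        if key not in cache:
            cache[key] = summarize(chunk)  # the expensive step
    path.write_text(json.dumps(cache), encoding="utf-8")
    return cache


calls = []


def counting_summarizer(chunk: str) -> str:
    # Stand-in for a language-model call; records each invocation.
    calls.append(chunk)
    return f"Summary of: {chunk[:20]}"


with tempfile.TemporaryDirectory() as tmp:
    cache_file = Path(tmp) / "summaries.json"
    chunks = ["first article chunk", "second article chunk"]
    first = summaries_with_cache(chunks, counting_summarizer, cache_file)
    second = summaries_with_cache(chunks, counting_summarizer, cache_file)

print(len(calls))  # 2: the second pass reused the stored summaries
```

Hashing the chunk text means that editing a chunk naturally produces a new key, so only changed chunks are summarized again.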

Another hiccup is the language model’s quality. Since those summaries come from a model like Claude, if it misreads a chunk—say, tagging something as healthcare-related when it’s about finance—the retrieval could go off track. The fix? Use a top-notch model and maybe double-check summaries for big projects. As models get smarter, this issue should shrink, too.

Wrapping It Up

There you go: Contextual Retrieval in a nutshell! This Anthropic gem is making AI smarter and more tuned-in to context, paving the way for cooler, more advanced tools. Whether you’re a coder dreaming up the next big AI thing or just here to geek out, this is worth watching.

Want more? Swing by Anthropic’s full article for more details.

And that’s a wrap for this edition! Stay tuned for more updates in the next newsletter. Until then, take care and stay curious!